Output

Just as the "Data" group manages input sources, the endpoints in this group allow outputs to be registered, listed and deleted.

Once an output has been registered, it can be attached to a single active process. Four types of output are available:

- rtsp: a processed video feed over the RTSP protocol. The feed can be consumed directly, as the API acts as the server.
- rtmp: a processed video feed over the RTMP protocol. The feed must be received by a server on the user's side.
- detection_data: a stream of detection metadata in JSON format over a WebSocket connection. This output has a simpler integration workflow: it does not need to be registered beforehand and becomes available automatically when a process starts. A sample script for reading this data stream is also provided below.
- drone_data: a stream of the telemetry data received from the drone, sent over a WebSocket connection.

The type and the URL must be provided when registering an output, and the URL scheme must match the protocol (rtmp:// or rtsp://). Each output type has its own requirements, which are detailed below.
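As a minimal sketch of registering a video output, the Python snippet below builds a POST /output request with the standard library and checks that the URL scheme matches the output type. The base_url parameter is an assumption (substitute your product's API address), authentication headers are omitted, and only the rtsp and rtmp types are handled; the function name is hypothetical.

```python
import json
import urllib.request

# Required URL scheme for each registrable video output type.
VIDEO_SCHEMES = {"rtmp": "rtmp://", "rtsp": "rtsp://"}


def build_register_output_request(base_url: str, alias: str, output_type: str, url: str) -> urllib.request.Request:
    """Build (but do not send) a POST /output request for a video output.

    base_url is an assumption; authentication headers are not added here.
    """
    expected = VIDEO_SCHEMES.get(output_type)
    if expected is None:
        raise ValueError(f"unsupported output type for registration: {output_type!r}")
    # The URL scheme must match the protocol of the output type.
    if not url.startswith(expected):
        raise ValueError(f"{output_type} outputs need a URL starting with {expected!r}")
    body = {"alias": alias, "type": output_type, "url": url}
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/output",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Once the required authentication has been added, the request can be sent with urllib.request.urlopen().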

Output types

RTMP Output

When registering an RTMP output (POST /output), the URL must contain the IP address and port of the server you are streaming to, as well as the path of the stream on that server, e.g. rtmp://1.2.3.4:1935/output_rtmp_stream. The body for such a request would be as follows:

{
  "alias": "Correct RTMP",
  "type": "rtmp",
  "url": "rtmp://1.2.3.4:1935/output_rtmp_stream"
}
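Before registering, it can help to check locally that an RTMP URL contains all three required parts (IP address, port and stream path). The standard-library sketch below does that; the function name is hypothetical, and the check only covers the URL format, not whether your server is reachable.

```python
from typing import Tuple
from urllib.parse import urlsplit


def check_rtmp_output_url(url: str) -> Tuple[str, int, str]:
    """Split an RTMP output URL into (host, port, stream path), rejecting incomplete URLs."""
    parts = urlsplit(url)
    if parts.scheme != "rtmp":
        raise ValueError("RTMP outputs need an rtmp:// URL")
    if not parts.hostname:
        raise ValueError("missing server IP address")
    # .port is None when no port is given (and raises ValueError if it is not a valid number).
    if parts.port is None:
        raise ValueError("missing server port")
    stream_path = parts.path.lstrip("/")
    if not stream_path:
        raise ValueError("missing stream path")
    return parts.hostname, parts.port, stream_path
```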

This output requires a server on the user's side. There are multiple open-source and paid solutions for setting up such a server, a popular one being MediaMTX.

RTSP Output

The RTSP output differs from the other video outputs. When registering an RTSP output (POST /output), the URL must contain only the path of the stream, without an IP address or port, e.g. "rtsp://output_rtsp_stream". This output does not require a server on the user's side: an ingestible RTSP stream is provided on our end.

Sample body for the RTSP Output:

{
  "alias": "Correct RTSP",
  "type": "rtsp",
  "url": "rtsp://output_rtsp_stream"
}

When an RTSP output is attached to an active process (see Workflow), the ingestible stream created for the sample body above is: rtsp://senseaeronautics.com:8554/{username}/output_rtsp_stream
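Assuming the pattern in the example above holds for any stream path, the playback URL can be derived from the registered URL and your username. The host and port constant is taken from that single example, and the helper is illustrative only.

```python
# Host and port taken from the example above; assumed to be the same for every stream.
RTSP_PLAYBACK_HOST = "senseaeronautics.com:8554"


def rtsp_playback_url(registered_url: str, username: str) -> str:
    """Derive the playback URL of an attached RTSP output from its registered URL."""
    prefix = "rtsp://"
    if not registered_url.startswith(prefix):
        raise ValueError("RTSP outputs are registered as rtsp://<stream_path>")
    stream_path = registered_url[len(prefix):].strip("/")
    # Registered RTSP URLs carry only a path: no IP address or port.
    if not stream_path or ":" in stream_path:
        raise ValueError("expected only a stream path, without host or port")
    return f"rtsp://{RTSP_PLAYBACK_HOST}/{username}/{stream_path}"
```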

Detection Data Output

This output provides a stream of detection metadata in JSON format over a WebSocket. It is the most lightweight output, as it does not carry a video stream, and it enables a more advanced integration with the API. It is always available and does not need to be registered or set up by the user.

The WebSocket can be accessed at wss://{product}.senseaeronautics.com/process/{process_uuid}/detection_data and accepts a single client: while a client is connected, new connections are refused until the current one is closed.

This script illustrates how messages can be received and decoded on the client side.
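That script is not reproduced in this section. As an illustrative alternative, the sketch below uses the third-party websockets package (an assumption; install it with pip install websockets) to connect to the detection_data endpoint and print a one-line summary of each message. Both function names are hypothetical.

```python
import json


def summarize_detection_message(raw) -> str:
    """Decode one detection_data message (str or bytes) into a one-line summary."""
    msg = json.loads(raw)
    tracks = msg.get("active_tracks", [])
    labels = ", ".join(f"{t['class_name']}#{t['track_id']}" for t in tracks)
    return f"{msg['timestamp']} | {len(tracks)} track(s) | {labels}"


async def listen(product: str, process_uuid: str) -> None:
    # Third-party dependency (assumption): pip install websockets
    import websockets

    uri = f"wss://{product}.senseaeronautics.com/process/{process_uuid}/detection_data"
    # Only one client may be connected at a time; a second connection is refused.
    async with websockets.connect(uri) as ws:
        async for raw in ws:
            print(summarize_detection_message(raw))
```

Run it with asyncio.run(listen("your-product", process_uuid)), replacing the placeholders with your product name and process UUID. Since the endpoint accepts a single client, close any other connection first.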

Message format

The detection data message follows the format:

{
  "timestamp": "2024-05-04T08:26:15.123Z",
  "source_uri": "rtsp://input.stream:1234",
  "process_uuid": "3f2b7a70-9c4b-4a6a-a28d-3b2a4a4f3c13",
  "active_tracks": [
    {...track_1...},
    {...track_2...},
    ...
  ]
}

The timestamp is given in Zulu time (UTC).
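Zulu timestamps can be turned into timezone-aware datetime objects with the standard library. The helper below is a sketch with a hypothetical name; replacing the trailing Z keeps it working on Python versions older than 3.11, whose datetime.fromisoformat() does not accept that suffix.

```python
from datetime import datetime, timezone


def parse_zulu(ts: str) -> datetime:
    """Parse a Zulu (UTC) timestamp such as '2024-05-04T08:26:15.123Z'."""
    # fromisoformat() accepts a trailing 'Z' only from Python 3.11 on,
    # so rewrite it as an explicit +00:00 offset first.
    if ts.endswith("Z"):
        ts = ts[:-1] + "+00:00"
    # Normalize to UTC so the result is timezone-aware and expressed in UTC.
    return datetime.fromisoformat(ts).astimezone(timezone.utc)
```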

Each track follows the format:

{
  "track_id": 0,
  "class_id": 1,
  "class_name": "vehicle",
  "bbox": [263.0, 115.0, 14.0, 9.0],
  "scaled_bbox": [0.20546875, 0.1597222222222222, 0.0109375, 0.0125],
  "confidence": 24,
  "latitude": 42.231233,
  "longitude": -8.333333,
  "altitude": 720,
  "distance": 50,
  "azimuth": 20,
  "elevation": 10
}

Fields are the following:

- timestamp: Detection timestamp in Zulu time.
- source_uri: Input stream URI.
- process_uuid: UUID of this active process.
- active_tracks: List of active tracks in the stream.

Each active track has the following keys:

- track_id: The ID assigned to a tracked detection. Takes the value 0 when tracking is not enabled.
- class_id: The ID of the class of the detection.
- class_name: The name of the class of the detection.
- bbox: The bounding box of the detection in the original resolution of the input video. The format is [x, y, width, height].
- scaled_bbox: The bounding box of the detection given as a fraction (0 to 1) of the input resolution on each axis. The format is [x, y, width, height].
- confidence: The confidence of the detection, between 0 and 100%.
- latitude: Geographic latitude coordinate of the detection.
- longitude: Geographic longitude coordinate of the detection.
- altitude: Altitude of the detection in meters (ASL).
- distance: Distance from the observer to the detected object, in meters.
- azimuth: Azimuth angle relative to the drone camera's line of sight, in degrees (positive above the center of the image).
- elevation: Elevation angle relative to the drone camera's line of sight, in degrees (positive above the center of the image).
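In the example track, scaled_bbox is bbox divided by the input width and height (263 / 0.20546875 = 1280 and 115 / 0.1597... = 720, i.e. a 1280x720 input), so a client can map it onto a display of any size. The sketch below does that and also filters tracks by a confidence threshold; the threshold value and both function names are hypothetical.

```python
from typing import Dict, List, Sequence


def scaled_to_pixels(scaled_bbox: Sequence[float], width: int, height: int) -> List[float]:
    """Convert an [x, y, width, height] box given as fractions of the frame into pixels."""
    x, y, w, h = scaled_bbox
    return [x * width, y * height, w * width, h * height]


def confident_tracks(tracks: List[Dict], min_confidence: int = 50) -> List[Dict]:
    """Keep only tracks whose confidence (0 to 100) reaches the threshold."""
    return [t for t in tracks if t["confidence"] >= min_confidence]
```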

Track report

This output allows retrieving information about the tracks detected over the last few minutes. This frees an operator from having to keep full attention on each detection stream, and an integration from having to keep a constant connection to the /detection_data WebSocket. It also enables the generation of reports summarizing the detected tracks, including their first and last known locations, detection times and a thumbnail for visual reference.

To check whether this feature is enabled for your API key, use the endpoint GET /user_info.

The track report for a finished process can also be obtained, as long as this feature was enabled when the process started. To get a list of processes with an available track report, use GET /tracked_processes. To delete the report for a given process, use DELETE /process/{process_uuid}/tracks.

Reports are queried through the endpoint GET /process/{process_uuid}/tracks. The response contains a list of tracks, each of which is a JSON object with the following fields:

- track_id: The ID assigned to a tracked detection.
- category_id: The ID of the category of the detection.
- category_name: The name of the category of the detection.
- bbox: The bounding box of the detection in the original resolution of the input video. The format is [x, y, width, height].
- list_confidences: A list with the confidences of all detections, each one between 0 and 100%.
- max_confidence: The maximum confidence registered for the track.
- min_confidence: The minimum confidence registered for the track.
- image_patches: A list with one dictionary per patch. Generally, only the patch that best represents the detection is provided. Each dictionary contains the base64-encoded image, the confidence and the timestamp of that patch.
- mean_confidence: The average confidence registered for the track.
- timestamps: List with the history of detection timestamps for the track, in POSIX format.
- is_live: Whether the track is live at the moment of the query.
- first_seen: Time when the track was first detected.
- last_seen: Time when the track was last detected.
- latlon_history: List with the history of detection coordinates (latitude, longitude) for the track.
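The three track-report endpoints mentioned above can be prepared with the standard library as in the sketch below. The base_url parameter is an assumption (the same REST address used for the other endpoints), authentication headers are omitted, and the helper and its dictionary keys are hypothetical.

```python
import urllib.request
from typing import Dict


def track_report_requests(base_url: str, process_uuid: str) -> Dict[str, urllib.request.Request]:
    """Build (without sending) the track-report requests for one process."""
    base = base_url.rstrip("/")
    tracks_url = f"{base}/process/{process_uuid}/tracks"
    return {
        # Processes that have a track report available.
        "list_processes": urllib.request.Request(f"{base}/tracked_processes", method="GET"),
        # The report itself.
        "get_report": urllib.request.Request(tracks_url, method="GET"),
        # Removes the report for this process.
        "delete_report": urllib.request.Request(tracks_url, method="DELETE"),
    }
```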

An example message would look like this:

{
  "process_uuid": "7bf9a90d-0831-4dd1-aa20-fa387ca5c93a",
  "report_time": 1746000206.487046,
  "time_window_minutes": 5,
  "tracks": [
    {
      "track_id": 1,
      "class_id": 0,
      "bbox": [447, 625, 478, 655],
      "list_confidences": [80, 80, ...],
      "max_confidence": 81,
      "min_confidence": 79,
      "mean_confidence": 79.90,
      "image_patches": [
        {
          "image": "/9j/4A...",
          "confidence": 87,
          "timestamp": 1746000194.7368646
        }
      ],
      "timestamps": [
        1746000192.8733895,
        1746000206.3574142,
        ...
      ],
      "first_seen": 1746000192.8733895,
      "last_seen": 1746000206.3574142,
      "latlon_history": [[42.3460864, -7.8610432], [42.3460864, -7.8610432], ...]
    }
  ]
}
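The sketch below condenses such a report into one summary per track: duration, first and last known positions and the size of the decoded thumbnail. It assumes each latlon_history entry is a [latitude, longitude] pair and picks the highest-confidence patch; the function name is hypothetical. The /9j/ prefix in the example suggests the patches are JPEG images, although the format is not stated here.

```python
import base64
from typing import Dict, List


def summarize_track_report(report: Dict) -> List[Dict]:
    """Return a compact summary per track from a GET /process/{process_uuid}/tracks response."""
    summary = []
    for track in report.get("tracks", []):
        patches = track.get("image_patches", [])
        # Pick the highest-confidence patch, if any, and decode its base64 image.
        best = max(patches, key=lambda p: p["confidence"], default=None)
        image_bytes = base64.b64decode(best["image"]) if best else b""
        history = track.get("latlon_history", [])
        summary.append({
            "track_id": track["track_id"],
            "duration_s": track["last_seen"] - track["first_seen"],
            "first_latlon": tuple(history[0]) if history else None,
            "last_latlon": tuple(history[-1]) if history else None,
            "thumbnail_bytes": len(image_bytes),
        })
    return summary
```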

If the track report has been enhanced as described in Track Report Enhancement, it will include an additional field, enhanced_data, with the new information.

Drone data output

This output provides the telemetry received by our system, in JSON format. It is available via WebSocket at wss://{product}.senseaeronautics.com/process/{process_uuid}/drone_data. The same script provided in the previous section can be used to read the drone data on the client side. An example message:

{
  "timestamp": 1231234,
  "latitude": 37.7749,
  "longitude": -122.4194,
  "speed": 15.2,
  "drone_pitch": -5.3,
  "drone_yaw": 120.0,
  "drone_roll": 2.1,
  "sensor_hfov": 90.0,
  "sensor_vfov": 60.0,
  "camera_pitch": -3.5,
  "camera_yaw": 118.5,
  "camera_roll": 0.0,
  "altitude": 150.7,
  "rel_altitude": 30.5
}

Where the fields are:

- timestamp: Detection time as a Unix epoch timestamp.
- latitude: Geographic latitude coordinate.
- longitude: Geographic longitude coordinate.
- speed: Speed of the drone.
- drone_pitch: Drone inclination (pitch) in degrees.
- drone_yaw: Drone orientation (yaw) in degrees.
- drone_roll: Drone lateral rotation (roll) in degrees.
- sensor_hfov: Sensor horizontal field of view in degrees.
- sensor_vfov: Sensor vertical field of view in degrees.
- camera_pitch: Camera inclination (pitch) in degrees.
- camera_yaw: Camera orientation (yaw) in degrees.
- camera_roll: Camera lateral rotation (roll) in degrees.
- altitude: Absolute altitude (ASL) in meters.
- rel_altitude: Relative altitude in meters.
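A minimal way to load these messages into a typed object is a dataclass whose fields mirror the list above. Treating timestamp as seconds since the Unix epoch is an assumption, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, fields
from datetime import datetime, timezone


@dataclass
class DroneTelemetry:
    timestamp: float
    latitude: float
    longitude: float
    speed: float
    drone_pitch: float
    drone_yaw: float
    drone_roll: float
    sensor_hfov: float
    sensor_vfov: float
    camera_pitch: float
    camera_yaw: float
    camera_roll: float
    altitude: float
    rel_altitude: float

    @classmethod
    def from_message(cls, msg: dict) -> "DroneTelemetry":
        # Ignore keys not listed above so that extra fields do not break parsing.
        names = {f.name for f in fields(cls)}
        return cls(**{k: v for k, v in msg.items() if k in names})

    def time_utc(self) -> datetime:
        # Assumes timestamp is in seconds since the Unix epoch.
        return datetime.fromtimestamp(self.timestamp, tz=timezone.utc)
```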

Output resolution

By default, outputs have the same resolution as the associated input (outputs with this configuration have their settings set to null). A different resolution can be set by providing one of the available presets in the settings field, as follows:

{
  "alias": "Different resolution RTSP",
  "type": "rtsp",
  "url": "rtsp://output_rtsp_stream",
  "settings": "720p"
}

Available resolutions are: 360p, 720p and 1080p.

This key can be added to any output, though it only makes sense for video outputs. detection_data is a stream of JSON data and has no resolution; as explained above, its bounding boxes are always given with respect to the original resolution of the input video. The key is therefore ignored for detection_data outputs.

For details on the workflow for enabling an output, see Workflow.
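A client can validate the settings value before registering an output. The sketch below accepts only the three resolutions listed above and leaves settings out when none is requested, assuming an omitted key is treated like null (the default input resolution); the helper name is hypothetical.

```python
from typing import Dict, Optional

ALLOWED_RESOLUTIONS = {"360p", "720p", "1080p"}


def output_body(alias: str, output_type: str, url: str, settings: Optional[str] = None) -> Dict[str, str]:
    """Build a POST /output body, optionally with a resolution preset."""
    body = {"alias": alias, "type": output_type, "url": url}
    if settings is not None:
        if settings not in ALLOWED_RESOLUTIONS:
            raise ValueError(f"settings must be one of {sorted(ALLOWED_RESOLUTIONS)}")
        body["settings"] = settings
    return body
```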

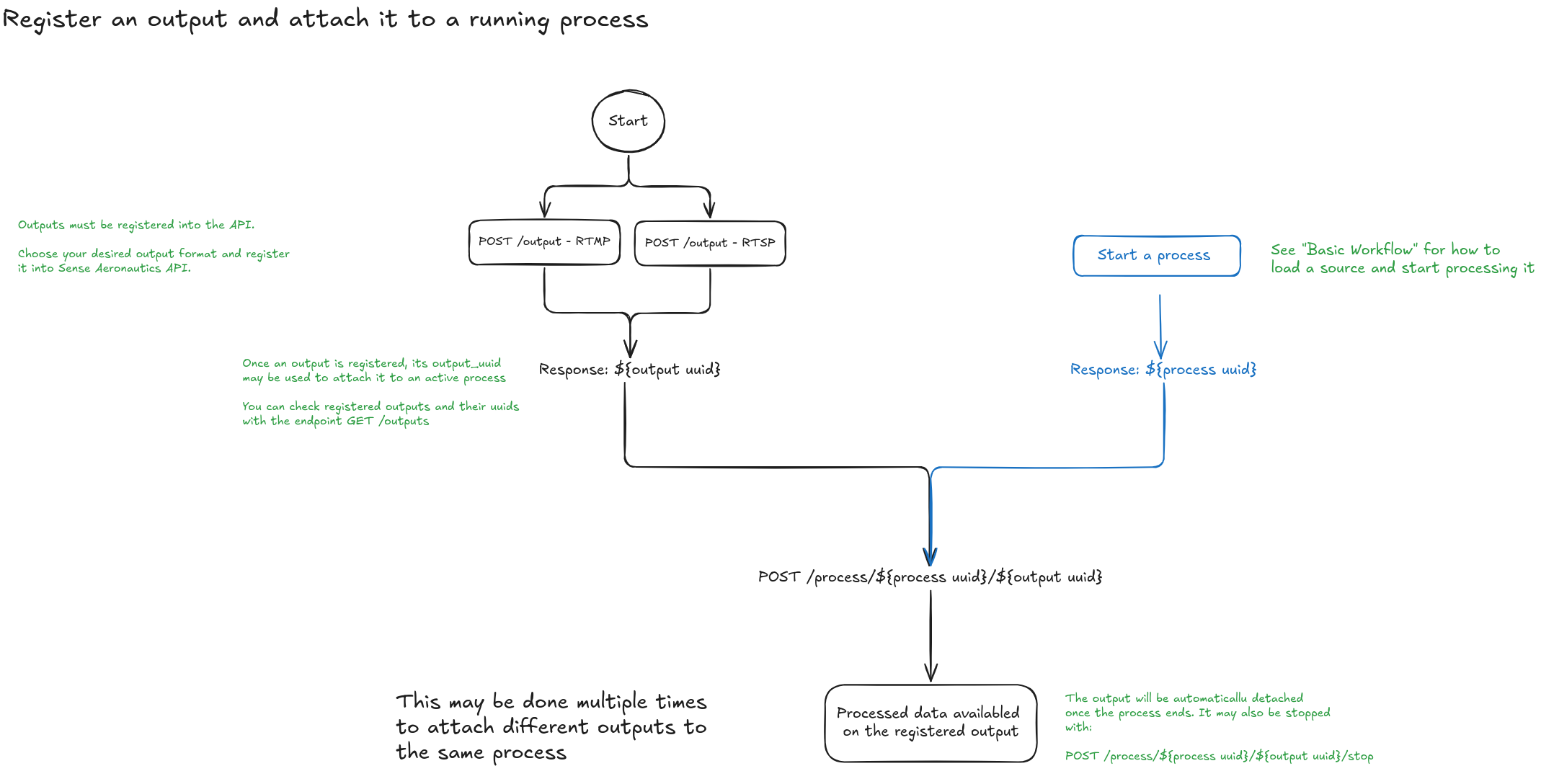

Workflow

The workflow for using an output was already outlined in the introduction to the docs, and can be summed up in the following steps:

- Start your process, which will have a unique process_uuid.
- Post a new output, as explained in the previous section, or pick an existing one. This will give you an output_uuid.
- Attach the output to the running process with POST /process/{process_uuid}/{output_uuid}.
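The last two steps can be sketched as follows, assuming a hypothetical post(path, body) helper that sends an authenticated POST request and returns the decoded JSON response, and assuming the registration response exposes the new ID under an output_uuid key. Starting the process is left out; process_uuid is taken as given.

```python
from typing import Any, Callable, Dict, Optional

# Hypothetical transport: post(path, json_body_or_None) -> decoded JSON response.
PostFn = Callable[[str, Optional[Dict[str, Any]]], Dict[str, Any]]


def attach_new_output(post: PostFn, process_uuid: str, output_body: Dict[str, Any]) -> str:
    """Register an output and attach it to a running process; return its output_uuid."""
    # Register the output (POST /output) and read its ID.
    created = post("/output", output_body)
    output_uuid = created["output_uuid"]  # assumed response key
    # Attach it to the running process.
    post(f"/process/{process_uuid}/{output_uuid}", None)
    return output_uuid
```

Keeping the transport behind a callable makes the sequence easy to test with a fake, and lets you plug in whichever HTTP client and authentication scheme you already use.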

Note that this workflow does not apply to the detection_data WebSocket stream, which is available as soon as the process starts.

A flow diagram of attaching an output to a process is shown in the image above.

Note that multiple outputs may be attached to the same process in order to view it at different resolutions, over different protocols and in different formats.

Extra: streaming response output

There is an additional endpoint in the output group, /feed, which provides a basic HTTP stream of the processed frames.

It is not optimized for performance or integration, but it is useful for quick testing, which is why it is included in the response when a process is started.